Introduction

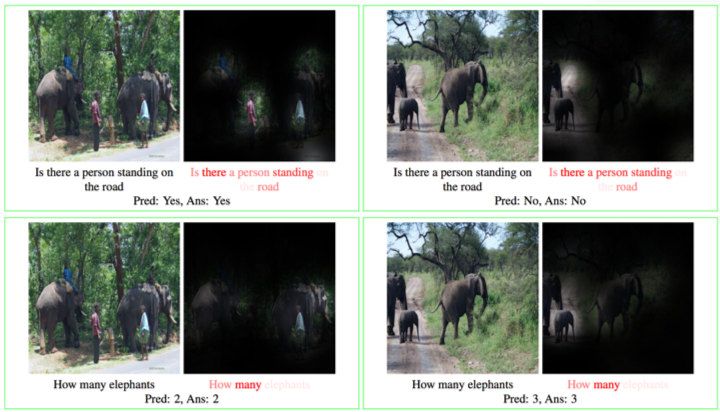

We study methods for understanding the visual content of an image through natural language. One example is visual question answering: we proposed a method that takes an image and a natural language question about it and produces an accurate natural language answer. Good performance on this task depends on capturing the relationship between the question and the visual information. We propose a novel method that uses an attention mechanism to capture this relationship between natural language and vision.
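To make the idea of attention between a question and an image concrete, the following is a minimal sketch of generic scaled dot-product attention, in which each question word attends over a set of image regions. It is an illustration only and not the method proposed in the papers listed below; the function names (`cross_attention`, `softmax`, `dot`) and the toy feature vectors are assumptions made for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cross_attention(question_vecs, region_vecs):
    """Let each question word attend over all image regions.

    Returns (weights, attended):
      weights[i][j] is how strongly word i attends to region j,
      attended[i] is the attention-weighted sum of region vectors for word i.
    """
    dim = len(region_vecs[0])
    scale = math.sqrt(dim)  # standard scaling for dot-product attention
    weights, attended = [], []
    for q in question_vecs:
        scores = [dot(q, r) / scale for r in region_vecs]
        w = softmax(scores)
        weights.append(w)
        attended.append(
            [sum(w[j] * region_vecs[j][k] for j in range(len(region_vecs)))
             for k in range(dim)]
        )
    return weights, attended


# Toy, hand-made features (hypothetical): two question words, three image regions.
question_vecs = [[1.0, 0.0], [0.0, 1.0]]
region_vecs = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

weights, attended = cross_attention(question_vecs, region_vecs)
for i, row in enumerate(weights):
    print(f"word {i} attention over regions:", [round(x, 3) for x in row])
```

In this toy setup, each word places its largest weight on the region whose feature vector is most aligned with it. In practice the word and region vectors would come from learned language and image encoders, and attention can also be computed in the opposite direction, from image regions to question words.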

References

Van-Quang Nguyen, Masanori Suganuma, Takayuki Okatani: Look Wide and Interpret Twice: Improving Performance on Interactive Instruction-following Tasks, International Joint Conference on Artificial Intelligence (IJCAI), 2021

Van-Quang Nguyen, Masanori Suganuma, Takayuki Okatani: Efficient Attention Mechanism for Visual Dialog that can Handle All the Interactions between Multiple Inputs, European Conference on Computer Vision (ECCV), 2020

Duy-Kien Nguyen, Takayuki Okatani: Multi-task Learning of Hierarchical Vision-Language Representation, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019

Duy-Kien Nguyen, Takayuki Okatani: Improved Fusion of Visual and Language Representations by Dense Symmetric Coattention for Visual Question Answering, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018