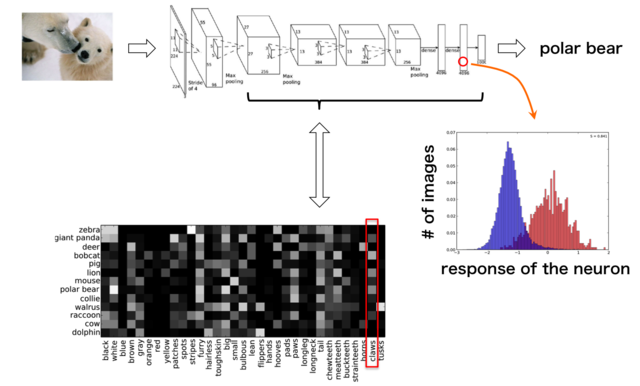

Convolutional networks have recently been reported to perform well in many image recognition tasks. Particularly for object category recognition, they significantly outperform previous approaches that are not based on neural networks. This performance is arguably owed to their ability to discover, through learning, image features better suited to recognition, that is, to acquire better internal representations of their inputs. However, despite this performance, it remains an open question why convolutional networks work so well and how they learn such good representations. In this study, we conjecture that the learned representation can be interpreted as category-level attributes. We conducted several experiments on the AwA (Animals with Attributes) dataset to examine this conjecture and obtained promising results. We first report that, in a convolutional network trained for category classification, a set of neurons automatically emerges that can predict some attributes fairly accurately. It is more natural, however, to think that the trained network discovers attributes that are semantic or even non-semantic (i.e., difficult to express as a word). To explore this possibility, we perform zero-shot learning by regarding the outputs of an upper layer as attributes describing the categories. The result shows that this approach attains state-of-the-art classification accuracy, which also confirms the above conjecture.
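The abstract does not spell out how upper-layer outputs are turned into a zero-shot classifier. The sketch below shows one common recipe, assumed here and not necessarily the authors' procedure. It fits a ridge-regression map from human-annotated class attributes (as AwA provides) to the mean upper-layer activation of each seen class, predicts a feature-space prototype for each unseen class from its attributes alone, and labels test images by the nearest prototype under cosine similarity. The function names `fit_attribute_map` and `zero_shot_predict`, the regularizer `lam`, and the synthetic data standing in for CNN activations are all hypothetical.

```python
import numpy as np

def fit_attribute_map(A_seen, P_seen, lam=1e-3):
    # Ridge regression (assumed choice): W = argmin ||A_seen W - P_seen||^2 + lam ||W||^2
    # A_seen: (n_seen, n_attr) class-attribute matrix; P_seen: (n_seen, d) class-mean activations.
    n_attr = A_seen.shape[1]
    gram = A_seen.T @ A_seen + lam * np.eye(n_attr)
    return np.linalg.solve(gram, A_seen.T @ P_seen)  # shape (n_attr, d)

def zero_shot_predict(features, A_unseen, W):
    # Predicted activation-space prototype for each unseen class, from its attributes only.
    protos = A_unseen @ W
    protos /= np.linalg.norm(protos, axis=1, keepdims=True)
    feats = features / np.linalg.norm(features, axis=1, keepdims=True)
    # Nearest prototype by cosine similarity; returns indices into the unseen classes.
    return np.argmax(feats @ protos.T, axis=1)

# --- Synthetic demo: hypothetical data standing in for upper-layer CNN activations ---
rng = np.random.default_rng(0)
n_attr, d, n_seen, n_unseen, per_class = 20, 50, 30, 5, 40
A = rng.integers(0, 2, size=(n_seen + n_unseen, n_attr)).astype(float)
M_true = rng.normal(size=(n_attr, d))  # hidden linear relation, assumed only for the demo

def sample(c, n):
    # n noisy "activation" vectors for class c
    return A[c] @ M_true + 0.1 * rng.normal(size=(n, d))

# Seen classes: average sampled activations into class prototypes, then fit the map.
P_seen = np.stack([sample(c, per_class).mean(axis=0) for c in range(n_seen)])
W = fit_attribute_map(A[:n_seen], P_seen)

# Unseen classes: never used for fitting, described only by their attribute vectors.
X_test = np.vstack([sample(n_seen + k, per_class) for k in range(n_unseen)])
y_test = np.repeat(np.arange(n_unseen), per_class)
pred = zero_shot_predict(X_test, A[n_seen:], W)
acc = float(np.mean(pred == y_test))
print(f"zero-shot accuracy on unseen classes: {acc:.2f}")
```

Mapping from attributes into the activation space, rather than the reverse, keeps the upper-layer outputs as the "attribute" representation in which classes are compared; regressing activations onto attributes would be an equally plausible alternative.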

Understanding Convolutional Neural Networks in Terms of Category-level Attributes, Makoto Ozeki and Takayuki Okatani, Proceedings of the Asian Conference on Computer Vision (ACCV), 2014.